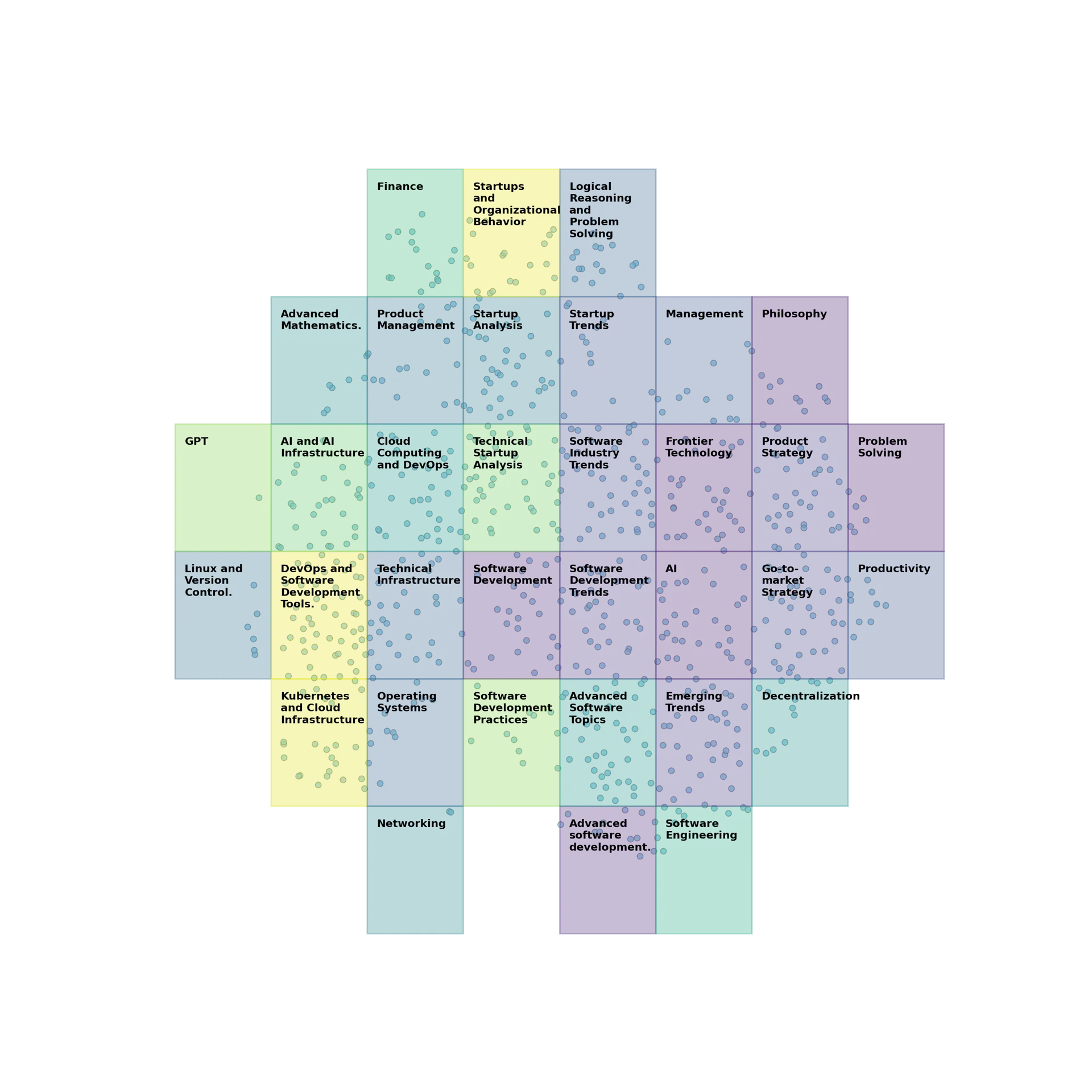

This is daily blog #730. Last year I visualized the hyperlinks between my posts (using virgo, my graph-based configuration language). This year, the embedding space of the last 730 posts.

- I embedded all my posts using BERT (a transformer model pre-trained on a large corpus of English data). BERT uses 768-dimensional vectors.

- Then I ran them through t-SNE (t-distributed stochastic neighbor embedding), a fancy way to visualize high-dimensional data by projecting it down to two dimensions.

- Finally, I separated the two-dimensional space into equally sized bins and asked GPT-3.5 to develop a category name for each set of post titles.
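The binning step can be sketched in a few lines of plain Python. This is a minimal sketch rather than the code from the repo: it assumes "equally sized bins" means a grid of equal-width cells over the t-SNE output, and the `bin_points` helper, the coordinates, and the placeholder titles are all made up for illustration.

```python
from collections import defaultdict


def bin_points(points, titles, n_bins=4):
    """Group titles by the grid cell their 2-D point falls into.

    Assumes an n_bins x n_bins grid of equal-width cells spanning the
    bounding box of the points (an assumption, not the repo's method).
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, y_min = min(xs), min(ys)
    # Fall back to 1.0 so a degenerate (zero-width) axis does not divide by zero.
    x_span = (max(xs) - x_min) or 1.0
    y_span = (max(ys) - y_min) or 1.0

    cells = defaultdict(list)
    for (x, y), title in zip(points, titles):
        # Scale into [0, n_bins] and clamp the maximum onto the last cell.
        col = min(int((x - x_min) / x_span * n_bins), n_bins - 1)
        row = min(int((y - y_min) / y_span * n_bins), n_bins - 1)
        cells[(row, col)].append(title)
    return dict(cells)


# Made-up coordinates standing in for t-SNE output, with placeholder titles.
points = [(-9.5, -8.0), (-9.0, -7.5), (8.5, 9.0), (9.0, 8.0), (0.5, -0.5)]
titles = ["infra post A", "infra post B", "meta post A", "meta post B", "mixed post"]

cells = bin_points(points, titles, n_bins=2)
for cell, cell_titles in sorted(cells.items()):
    print(cell, cell_titles)
```

Each cell's list of titles is what would then be handed to the language model to name. Row 0 is the bottom of the plot and column 0 is the left, so `(0, 0)` corresponds to the bottom-left corner.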

I cleaned up a few titles that were too long for the display, but that’s about it. The code and data are on GitHub at r2d4/blog-embeddings.

Of course, there’s a lot missing when the dimensionality is reduced to only two, but there are some interesting insights.

The topics range from highly technical on the bottom left (Kubernetes and Cloud Infrastructure) to more meta topics on the top right (philosophy, problem-solving). There’s a roughly equal distribution of posts across the four quadrants.