Researchers have built text-to-image models to generate photorealistic images from only a text prompt. And they look very convincing.

From Google's Imagen

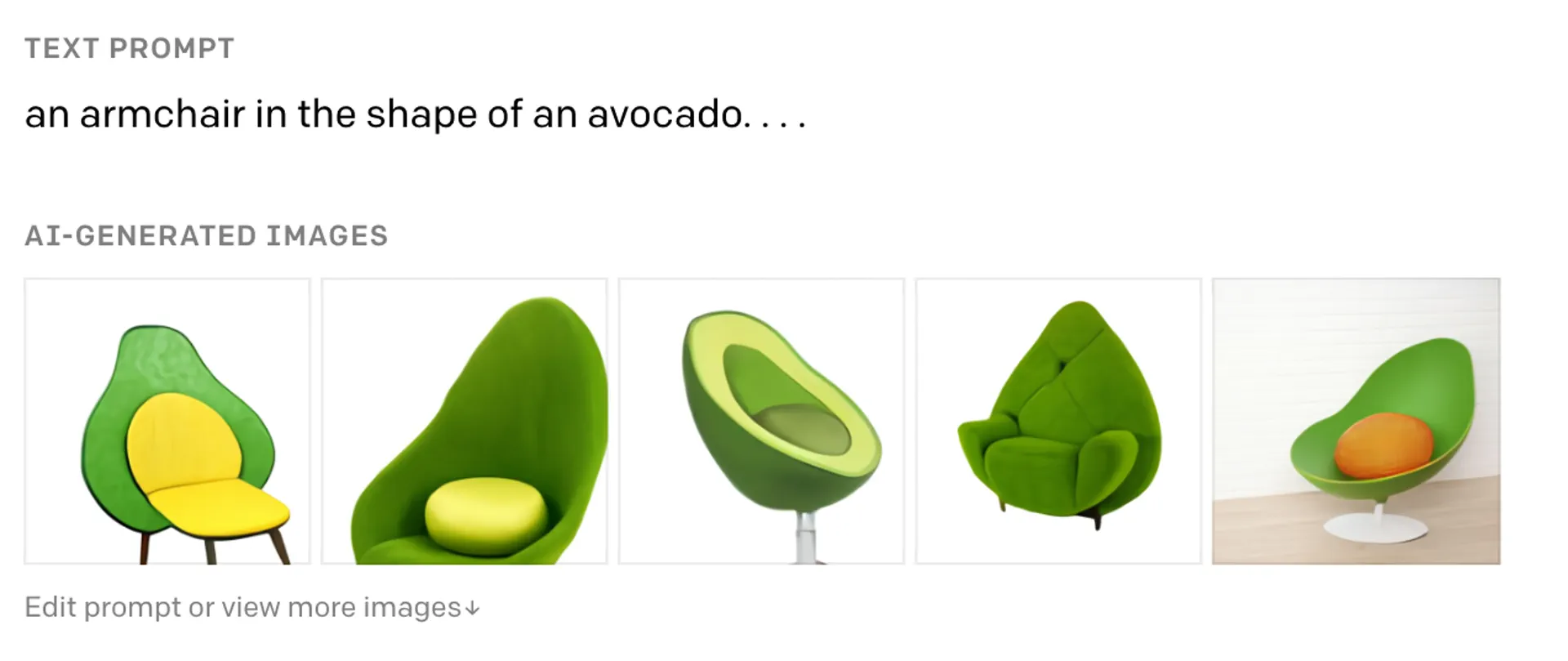

From DALL-E

The first model released was DALL-E by Open AI - a 12-billion parameter version of GPT-3. Google quickly followed up with their own model, Imagen by Google Research, which they claim tested better among human reviewers than comparable models.

They are both diffusion models. Diffusion models work by progressively adding noise to the training data until it is all noise. Then, it attempts to reverse the process, adding details until it can reproduce a noise-less sample. You can read a more in-depth summary of the class of models on Google's AI Blog.

The research findings from the diffusion models are interesting

- Uncurated user-generated data from the web continues to be useful for a wide variety of models

- Increasing the text-only language model is more effective than increasing the image model, i.e., more text data goes a long way in training the model (better text-image alignment, better images).

- More parameters, better model (even at an enormous scale)

These models are exciting, and it will be interesting to see what use cases people come up with. Much like how AI-powered copyrighting didn't displace marketers, text-to-image models will be an asset and tool to creatives. I imagine just-in-time illustrations for books and engaging illustrations for almost every website.