There are two ways of constructing a software design: One way is to make it so simple that there are obviously no deficiencies, and the other way is to make it so complicated that there are no obvious deficiencies. The first method is far more difficult. It demands the same skill, devotion, insight, and even inspiration as the discovery of the simple physical laws which underlie the complex phenomena of nature.

– Tony Hoare, Turing Award 1980

Programmers often think of simplicity as a means to correctness: a difficult yet noble path to complete and airtight abstractions. Tony Hoare is a famous computer scientist (winner of the 1980 Turing Award, often called the highest honor in computer science) who spent many years working on formal methods and program verification.

While many of his ideas have had a substantial impact (CSP, for example, influenced the concurrency patterns in languages like Go), many of his methods have proven too demanding to find widespread adoption in everyday programming. His most widely used contribution might be the one he is least proud of: he invented the null reference in 1965.

I call it my billion-dollar mistake. It was the invention of the null reference in 1965. At that time, I was designing the first comprehensive type system for references in an object oriented language (ALGOL W). My goal was to ensure that all use of references should be absolutely safe, with checking performed automatically by the compiler. But I couldn't resist the temptation to put in a null reference, simply because it was so easy to implement. This has led to innumerable errors, vulnerabilities, and system crashes, which have probably caused a billion dollars of pain and damage in the last forty years.

– Tony Hoare

Null lets programmers go faster and fill in the blanks. Sure, some (maybe all) null references could have been replaced with a safer design. But null was easy to implement and practical to use.
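To make the tradeoff concrete, here is a minimal, hypothetical Python sketch. Python's `None` stands in for the null reference, and the `User` type, `USERS` table, and greeting functions are invented purely for illustration. Returning `None` is the simplest possible way to say "not found", but nothing at runtime forces the caller to check for it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    name: str


# Hypothetical in-memory "database", used only for this illustration.
USERS = {1: User("ada")}


def find_user(user_id: int) -> Optional[User]:
    """Null style: absence is signalled by returning None.

    Easy to write, but the caller is never forced to handle the None case.
    """
    return USERS.get(user_id)


def greeting_unchecked(user_id: int) -> str:
    # Forgetting the check runs fine until a lookup misses; then it fails
    # with AttributeError, Python's version of a null dereference.
    return "hello, " + find_user(user_id).name


def greeting_checked(user_id: int) -> str:
    # The careful version handles absence explicitly. A static checker such
    # as mypy flags the unchecked version above because of the Optional hint,
    # but Python itself does not enforce it.
    user = find_user(user_id)
    if user is None:
        return "hello, stranger"
    return "hello, " + user.name
```

The unchecked version is shorter and works on the happy path, which is exactly why null spread: the cost only shows up on the edge case nobody wrote a check for.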

While Hoare detested his invention of null (much as G. H. Hardy believed that his only valuable work was in pure mathematics), it illuminates a different, more practical school of programming thought: one that does not view simplicity as a means to correctness but elevates simplicity to a goal more important than correctness.

The Null Programming Philosophy: Simplicity over Completeness.

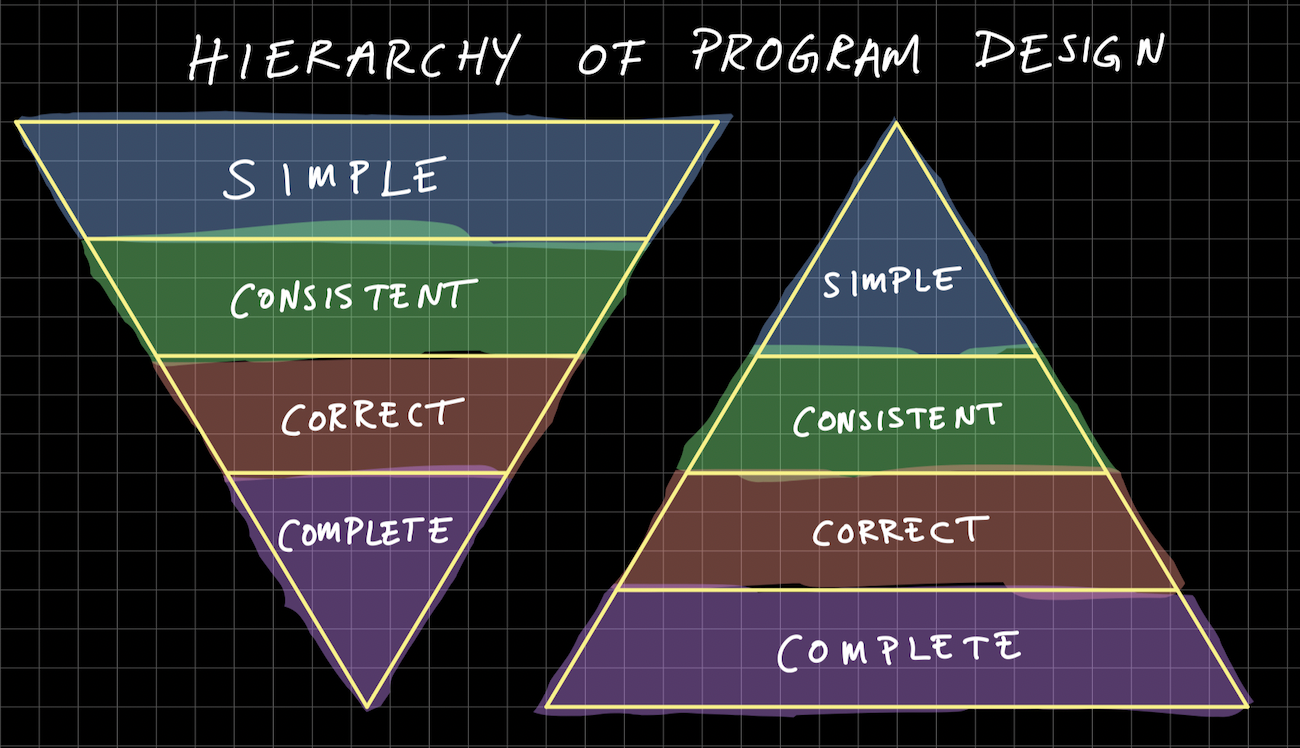

The traditional way of thinking about program design is the pyramid on the right.

The program must satisfy the long tail of use cases and edge cases. Furthermore, the program must be correct, even if that requires inconsistent inputs or outputs, or a complex implementation. Finally, the programmer must make the interface as simple as possible given the other constraints (completeness, correctness, consistency, in that order).

The Null Programming Philosophy (inspired by Hoare's "hack") flips the hierarchy upside down. It says that a simple program is better than a correct one: design a simple interface with a simple implementation. Such a program might not cover every edge case, and a correct feature might be left out entirely if it would require inputs or outputs inconsistent with the rest of the interface, because that inconsistency would cost simplicity.

This tradeoff is counterintuitive, but technologies that we assume were designed on the "right" side of the pyramid (completeness over simplicity) were actually products of the quick-and-dirty side (simplicity over completeness). Unix, C, and TCP/IP, for example, all fit the Null Programming Philosophy.

In the extreme, neither approach works. A simple yet consistently incorrect program is worthless; a correct but impossible-to-implement program is just theory. But if we are to err, we might as well err on the side of simplicity over correctness (even the best abstractions are leaky).